(Quite) A Few Words About Async

I’ve had a few conversations about async code recently (and not so recently) and seen some code that seems to make wrong assumptions about async, so I figured out it was time to have a serious chat about async, what it’s for, what it guarantees and what it doesn’t.

Most of the code in this entry will be written with Python syntax (and often Python libraries), but with a few minor exceptions, we’ll be discussing concepts that are valid across languages.

There’s more than one kind of performance

We all know about performance, right? I mean, there is some task we want our code to do (display something on screen, or request some data from a database, or download a file, or solve a mathematical problem, …) and that task should be finished as fast as possible. For bonus points, it should use as little memory as possible.

Right?

Well… not quite.

This kind of performance is called throughput. And having a high throughput is almost always a good thing. But in the 21st century, focusing on throughput is generally the wrong goal.

You see, these days, most applications1 look something like this:

while True:

while (event := get_next_event()):

on_event(event)

wait_until_there_is_an_event()Maybe you’re writing that loop yourself, but usually, not. You might

be writing asyncio.run or #[tokio::main] or Eio_main.run, or

just running your code in the browser or Node.js or BEAM,

or in plenty of other ways, but you’re not escaping the event loop.

If you’re writing a video game, you receive an event whenever the user presses a key, and also whenever it’s time to repaint the screen. If you’re writing a GUI tool, you receive an event whenever the user clicks on a button. If you’re writing a web server, you get an event whenever you receive a connection or some data.

And yes, you want to finish the task quickly. In fact, if you’re writing any kind of user-facing application, whether it’s a text processor or a video game, you have about 16ms to finish the task. You can do many things in 16ms. But there are also quite a few things that you can’t do, including opening a file 2 or getting a response from your web server 3.

If you’re writing a web server, you have more leeway. In most cases, you can afford to wait 1 second, possibly even 2. But there are plenty of tasks that your web server may need to complete and that will take more than 2 seconds. For instance, extracting lots of data from a busy database, or getting anything remotely coherent from a LLM.

So… now what?

Now we need to redefine performance. And in fact, there are plenty of definitions of performance. The one we’re going to focus on in this discussion latency: how long until something happens. You may not have finished opening your file, getting a response from your web server, or received anything that looks remotely coherent from Claude, but you need to respond something quickly.

Also, if you’re interested in the notion of Response Time Limits, it dates back to 1993: https://www.nngroup.com/articles/response-times-3-important-limits/. I seem to recall that Microsoft lead some further research when they were developing the original Surface tables (before the tablets). DoubleClick/Google also published some additional refinements in the specific case of web applications and mobile web applications. Sadly, I haven’t found the links.

The need for non-blocking code

Let’s start with a simple example:

class ComputeFibonacciEvent:

arg: int

def fibonacci(n: int) -> int:

if n <= 1:

return 1

return fibonacci(n - 1) + fibonacci(n - 2)

def on_event(event):

if isinstance(event, ComputeFibonacciEvent):

result = fibonacci(event.arg)

print(f"fibonacci({event.arg})={result}")

else:

...Yes, I’m very aware that you can rewrite Fibonacci in a more efficient manner, buy let’s keep this awfully inefficient implementation. Feel free to replace this with any other task that is slow to execute.

Now, does our event loop fit within our 16ms budget? For a sufficiently large

value of arg, it might not. But if we exceed our 16ms budget, we are blocking

our application from repainting the screen, or taking new HTTP requests, etc.

and that’s bad.

So how do we make our computation fit?

Well, there are many solutions, but all of them are variants around the following idea:

Make

fibonaccinon-blocking.

A few more definitions

Non-blocking is not the most common word you’ll find around the web. You’ll often read about asynchronous, concurrent, parallel. These are four distinct concepts that people tend to confuse hopelessly.

Code is non-blocking if it never blocks any critical thread.

In this conversation, we’re interested in the thread containing the event loop. Non-blocking is an objective. It’s also a guarantee provided by some functions in your libraries or your operating system.

How do you achieve this? Well, that’s what this entire post is all about.

Code is asynchronous if it structured to present explicit dependencies between executions.

Asynchronous is about code structure. Typically, this involves callbacks, or events, or some abstraction on top of them.

Asynchronous does NOT guarantee that your code is non-blocking. In fact, the only guarantee is that if you refactor your code to become non-blocking, you won’t break everything.

Code is concurrent if you can schedule independent tasks to run.

Concurrency is also a programming style. Concurrency does not guarantee when the tasks run. There are concurrency toolkits that simply wait until a task is complete before running the next one. There are also concurrency primitives that will interleave the execution of two concurrent tasks, attempting to ensure that each of them progresses regularly. If this is done automatically, this is called preemptive multitasking. If the code requires specific annotations, this is called cooperative multitasking. Most developers use the word “concurrent” only if it involves some kind of multitasking.

Concurrency also does not guarantee that an operation is non-blocking.

Concurrent and asynchronous are often confused, but they’re different things:

- code can be concurrent without being asynchronous, e.g. if you launch multiple tasks and synchronize them implicitly either by assuming run-to-completion or by using locks;

- code can be asynchronous without being concurrent, e.g. Haskell is all about letting you specify dependencies, but without

par, you can’t launch tasks.

Code is parallel if two tasks can run at the same physical instant.

Parallelism is a property of the language, operating system, hardware and system load. Code that is executed in parallel in one run could be executed sequentially in another.

If parallelism is guaranteed, then it can be used to guarantee that an operation is non-blocking.

Concurrent and parallel are also often confused, but they’re different things:

- code can be concurrent without being parallel, e.g. threads in pure Python will let you launch tasks, and only one of them will ever be executed at a time;

- it’s a bit of a stretch, but code could be parallel without being concurrent, e.g. if the garbage-collector or some tasks runs in parallel but the developer does not have access to primitives to launch additional parallel tasks.

The case for (and against) threads

We live in the 21st century, so (on most platforms) we have access to threads. Threads are always a mean to achieve concurrency and, depending on resource constraints and your programming language, may be a mean to achieve parallelism.

Let’s try to use them.

import threading

def on_event(event):

if isinstance(event, ComputeFibonacciEvent):

def background():

result = fibonacci(event.arg)

print(f"fibonacci({event.arg})={result}")

thread = threading.Thread(target=background)

thread.start()

else:

...Based on a quick benchmark, creating and launching each thread in Python takes about 6µs on my machine, so we’re well within budget. Yes, running fibonacci in the background can still take an arbitrary amount of time, but that’s expected. So… mission accomplished?

Well… yes and no.

If you look at your favorite web backend or video game or desktop application, you’ll see that this is not the solution that the developers have picked.

Threads are tricky

Part of it is the difficulty. Programming with threads has long been considered too difficult for mere mortals, with the need to use (and understand!) thread-safety, mutexes, atomic operations and, sometimes, thread-local storage. While the specific case in the example is trivially thread-safe, it is really easy to write code that appears thread-safe but relies on some well-hidden global state (as is very common in Python libraries, for instance). I have also, quite a few times, seen code misusing mutexes (typically by protecting the wrong variable or protecting it at the wrong moment, or sometimes by blocking the main thread with a mutex, hence making the entire exercise pointless) or atomicity (by mis-understanding the memory model), so yes, being wary of threads makes all sorts of sense.

In fact, to this day, I am not aware of any multi-threading safe GUI toolkit, and even languages that make it simple to write multi-threaded code, such as Go, do not make it simple to write correct multi-threaded code 4. Even the Rust stdlib got one function wrong for a long time.

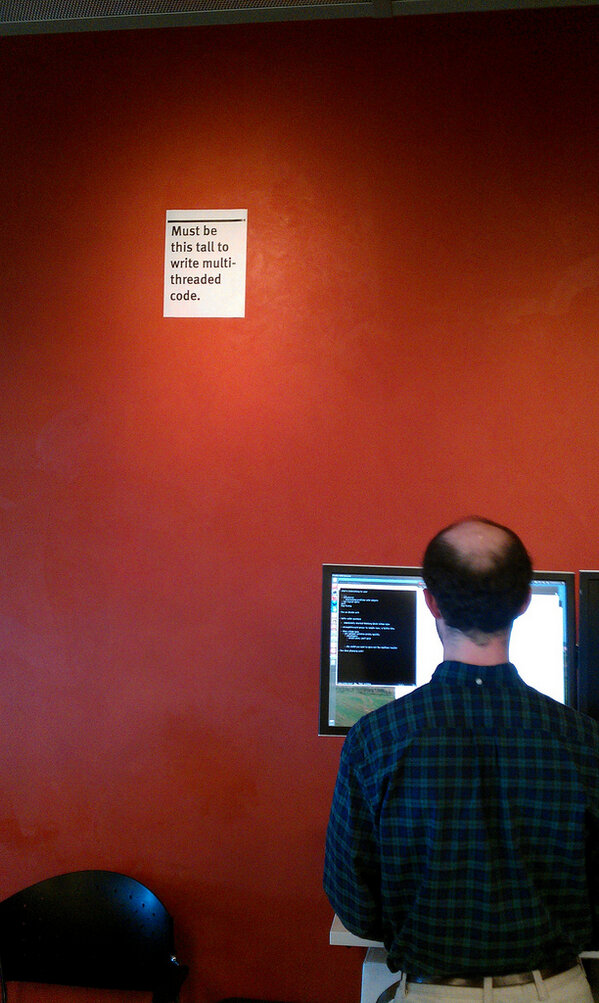

Or, in the words of David Baron:

By the way, I write that the snippet is trivially safe, but that’s actually not certain.

What happens if print is called by several threads at the same time? In such cases,

the original implementations of printf in C would cause all sorts of memory breakages.

Nowadays, it’s probably safe… but how do you check that? Rust explicitly uses locks

around stdout and stderr, to avoid any threading problems, but most other languages and

frameworks don’t.

Threads cost resources

Another reason is resource limitations. Each process can launch a finite number of threads. Each user can launch a finite number of threads. Each kernel can launch a finite number of threads. And when you’re writing and deploying your application, you often don’t know that number, which means that you can’t rely upon it: for all you know, your application will run without thread support (I have noticed this once with a Docker deployment).

Oh, and your threads typically eat some memory (8kb physical and 8Mb virtual on Linux, last time I checked), which contributes (a bit) to making threads a complicated proposition: if you launch a thread to make an operation non-blocking, and if launching threads may fail (sometimes in hard-to-catch fashion), how should you handle the case in which your thread launch fail? Can you even do it?

These resource limitations are the reason for which web backends cannot just rely on threads. Because it’s seldom a good idea to let your users (who could be malicious, or using buggy clients, or stampeding after an article on Hacker News) control how many resources you use.

Now, these resource limitations have been known and worked around for decades, through the use of thread pools:

from concurrent.futures import ThreadPoolExecutor

thread_pool = ThreadPoolExecutor() # Place a limit on the number of threads used.

def on_event(event):

if isinstance(event, ComputeFibonacciEvent):

def background():

result = fibonacci(event.arg)

print(f"fibonacci({event.arg})={result}")

thread_pool.submit(background)

else:

...And in many cases (again, assuming that your code is thread-safe), this works.

When doesn’t it work?

First, it generally doesn’t work if you’re writing a library. You don’t know the thread policy of your callers, so you could accidentally be introducing thread-unsafety in the caller’s code by using a thread pool, or multiplying the number of thread pools, hence breaking the constraint limits. Please don’t do that. If you wish to work with a thread pool, ask your client code to provide it.

The second problem appears if your threads are, for some reason, blocked. This can happen,

for instance, if any of your threads needs to access a database, or a remote server, if

you have any kind of call to sleep or if, for some reason, your print has been rerouted

to a file that is, for some reason slow. In such cases, can quickly saturate the thread

pool with threads doing nothing (well, waiting for completion of some activity that is not

controlled by your code). Any further task will just have to wait until one of the waiting

threads has finished its work.

In other words, you have completely lost throughput. If you’re writing a webserver, this suddenly means that you need more webservers to be able to serve all your users, which increases your cloud bills and the energy bill paid by the planet. If you’re writing a video game, sure, the framerate remains good, but the actions of the PCs and NPCs feel sluggish. If you’re writing a desktop app, sure, the UI remains responsive, but your users wait forever.

Threads may be GILed

Let’s get one nasty thing out of the way: if you’re writing Python or Ruby (or pretty old versions of OCaml), your threads are never, ever, going to run in parallel. That’s because these languages rely on a Global Interpreter Lock, designed specifically to make sure that only one thread is ever running at a given instant.

Why? Well, this makes the rest of the implementation of the language much, much simpler, in particular refcounting/garbage-collection. This also avoids lots of pitfalls that would make your life miserable. This also allows a number of optimizations (both in the VM/interpreter and by at user-level) that would become very much unsafe if the code truly ran in parallel.

Note that (at least in Python), native code (e.g. PyTorch, NumPy, any code written with PyO3, etc.) can release the GIL, which means that its content can run in background threads without blocking other threads. Most of the time, it’s a good thing, but if the developer of the native code doesn’t know what they’re doing, this can quickly lead to memory corruption.

What does this mean for performance? It means that if your code is not calling into code that releases the GIL, it’s always going to be quite slower in multi-threaded mode than in single-threaded mode. How do you know if code releases the GIL? Sadly, it’s pretty much never documented, so you have to experiment to find out.

Also, the case of OCaml demonstrates that you can bring an ecosystem from GIL-ed to fully multicore (OCaml ≥ 5), but suggests that it may take a pretty long time. Python seems to be slowly heading in this direction, but I don’t think we’ll see anything usable before 20305.

Threads are (kinda) slow

Operating System threads need to context-switch to provide preemptive multitasking, i.e. some thread runs on a CPU/core, then the thread is put on hold while some other code runs on that CPU/core. This is transparent to the user, but there is a cost.

Roughly speaking, to context-switch between two tasks, the OS scheduler will need to:

- interrupt the user-level code on that CPU and place the CPU into kernel mode;

- backup the registers, including the program counter;

- backup the pointers to the thread stack, the thread-local storage, etc;

- deactivate interrupt and signal-handling and backup their state;

- replace registers, pointers, interrupt handlers, signal handlers with those of the new thread;

- return to user land and resume execution of the code onto the CPU.

There is a cost to all this.

I’ve been too lazy to benchmark, but I’ve seen benchmarks on a machine more recent than mine that indicate a cost of 2-5µs during each context-switch. That’s time spent executing code that’s not serving your needs. If your code needs to context-switch 5000 times per core per second (that’s an arbitrary number), you have eaten 10-25ms of your budget just on context-switching, so that’s roughly equivalent to one frame per second which you may need to somehow compensate 6.

There are, of course, other costs to threads. Every lock you need to acquire/release has a raw synchronization cost, plus a contention cost. In particular, you very much want to avoid grabbing a lock on the main thread, as this makes thread synchronization a blocking operation. Even atomic operation you need to perform may have a performance cost, in particular on your cache. Etc.

In most cases, you probably don’t care. In particular, if you’re writing Python, you have bigger performance issues. However, if you’re writing performance-sensitive code (e.g. a video game, a video player, a browser), threads are not just part of the solution but also sometimes part of the performance problems you need to deal with.

What about green threads?

Green threads are threads implemented purely in user-land, i.e. they behave as threads but they don’t go through OS-level scheduling.

Scheduling between green threads is very similar to scheduling OS threads, but two things make it faster:

- scheduling doesn’t need to cross between user land and kernel;

- there are fewer things to backup and restore (in particular, generally no interrupt and signal handlers).

Similarly, lock synchronization may be faster.

On the other hand, pure green threads do not benefit from multiple cores or CPUs, which strongly decreases the usefulness of these threads.

Using pure green threads is rather uncommon these days. However, a few languages have so-called M:N schedulers, which combine green threads and OS threads. We’ll speak more of this in the section dedicated to Go.

So, threads?

In other words, while threads are a necessary component of any solution, they are not the magic bullet that we can hope.

The case for (and against) processes

For a long time, Linux did not support threads. This has never prevented developers from writing concurrent/parallel code. One of the workarounds for this lack of threads was to use multiple processes. Similarly, the solution to running code across multiple CPUs or cores in GIL-based languages has traditionally been to use multiple processes. In the 90s, OCaml even had a dialect called JoCaml, which featured a rather excellent paradigm for parallelism and distribution.

With a high-level API, spawning execution on a process is fairly simple:

from concurrent.futures import ProcessPoolExecutor

process_pool = ProcessPoolExecutor()

def on_event(event):

if isinstance(event, ComputeFibonacciEvent):

future = process_pool.submit(fibonacci, event.arg)

future.add_done_callback(lambda result: print(f"fibonacci({event.arg})={result}"))

else:

...This has immediate benefits: processes are not limited by a GIL, so code will typically run in parallel. Also, garbage-collectors are indendent across processes, so a slow garbage-collection on one process will generally not block another process.

There are a few drawbacks to the approach, though.

Processes are expensive

Each process runs its own copy of Python (or Ruby, or JavaScript, etc.), including an in-memory copy of not only the standard library, but every single dependency, a garbage-collector, etc. Also, if your language is JITed, each process runs its own JIT, which means its own copy of all the profiling data, and the optimized native code. The memory costs quickly add up.

In fact, one could argue that running multiple processes for a non-system language only makes sense if RAM is free and infinite.

Also, just as there are limits to the number of threads, there are limits to the number of processes.

Communications are expensive

Communications between threads is simple: you just send the data by passing references.

Communication between processes (aka Inter Process Communication or IPC), though? That’s another story. There are a few ways to do things.

You can use shared memory:

- process A serializes all the data it wishes to send to some local buffer;

- now that process A knows how much memory is needed to represent the data, it locks (internally) a segment of shared memory for this specific communication with process B;

- process A copies all this data to the shared memory segment;

- process A sends a signal to process B to inform process B that it should read the data (it’s a system call, so this needs to go through the kernel);

- process B receives a signal from process A (it’s an interrupt, so this may happen during garbage-collection for instance, or during a file access, etc. so the following steps may need to be delayed until garbage-collection is complete);

- process B finds the data in the shared memory and copies it to some local buffer;

- process B sends a signal to process A to inform process A that the data can now be deallocated (again, system call);

- process A receives the signal from process B;

- process A releases (internally) the locked segment;

- in parallel with 8-9, process B deserializes and checks for corruption the data receives from process A;

- process B has finally received the message.

Yeah, that’s not just complicated (that part is hidden from the user by a nice IPC library), it’s expensive.

You can simplify things quite a bit by going through sockets or pipes instead of shared memory, but at the expense of making more system calls, plus you’ll need to somehow make your pipe I/O non-blocking, which brings us back to our original problem.

Processes are (kinda) slow

Everything I wrote about threads being (kinda) slow? Well, all of this is true of processes, except that processes have way more data to save/restore in their Process Control Block: memory mappings, file descriptors, etc.

Also, locks between processes (which are fortunately needed much less often than locks within a process) typically go through the file system, so they’re a bit more expensive than locks between threads.

So, processes?

Processes are sometimes the right tool for the task, but their costs are steep, so you’ll need to be very careful about picking processes to solve your problem. Unless you only have access to processes, in which case… well, you don’t have a choice, do you?

Chunks

Threads and processes are a fairly high-level and expensive constructs. Perhaps we got off on the wrong

foot, and the right way to solve our problem is to approach it from the other end. What if we rewrote

our function fibonacci to have it compute things concurrently, manually making sure that

we never block the event loop?

This would, of course, be easier if our implementation wasn’t (non-tail) recursive, but this can be done.

@dataclass

class ComputeFibonacciEvent(BaseEvent):

"""

Event: We'd like to compute `fibonacci(arg)`.

"""

arg: int

"""

The value for which we wish to compute fibonacci.

"""

id: UUID

"""

A unique id for this event.

"""

parent_id: UUID | None

"""

If `None`, this is a toplevel request. Otherwise, the `id` of another

`ComputeFibonacciEvent` on behalf of which we're performing this

computation.

"""

@dataclass

class CompletedFibonacciEvent(BaseEvent):

"""

Event: We have finished computing `fibonacci(arg)`.

"""

arg: int

"""

The value for which we requested to compute fibonacci.

"""

parent_id: UUID | None

"""

If `None`, this was a toplevel request. Otherwise, the `id` of another

`ComputeFibonacciEvent` on behalf of which we're performing this

computation.

"""

result: int

"""

The value of `fibonacci(arg)`.

"""

@dataclass

class PendingFibonacci:

"""

Rendez-vous mechanism, holding the pending or partial state

of computing `fibonacci(arg)`.

"""

arg: int

"""

The value for which we requested to compute fibonacci.

"""

parent_id: UUID | None

"""

If `None`, this was a toplevel request. Otherwise, the `id` of another

`ComputeFibonacciEvent` on behalf of which we're performing this

computation.

"""

first: int | None = None

"""

If `None`, we haven't computed `fibonacci(arg - 1)` yet. Otherwise,

the value of `fibonacci(arg - 1)`.

"""

pending_fibonaccis: dict[UUID, PendingFibonacci] = dict()

"""

A mapping of event id => PendingFibonacci.

"""

def handle_event(event: BaseEvent):

if isinstance(event, ComputeFibonacciEvent):

if event.arg <= 1:

event_queue.put(CompletedFibonacciEvent(

parent_id=event.parent_id,

result=1,

arg=event.arg,

))

else:

# Enqueue the left and right computations.

event_queue.put(ComputeFibonacciEvent(

id = uuid4(),

parent_id=event.id,

arg = event.arg - 1,

))

event_queue.put(ComputeFibonacciEvent(

id = uuid4(),

parent_id=event.id,

arg = event.arg - 2,

))

# Store what we need to propagate the result.

pending_fibonaccis[event.id] = PendingFibonacci(

parent_id=event.parent_id,

arg=event.arg,

)

elif isinstance(event, CompletedFibonacciEvent):

pending = pending_fibonaccis[event.parent_id]

if pending.first is None:

pending.first = event.result

# We still need to wait for the second computation.

else:

# We have obtained both computations.

result = pending.first = event.result

if pending.parent_id is None:

#... and we're done!

print(f"fibonacci({event.arg}) = {result}")

else:

#...continue popping!

event_queue.put(CompletedFibonacciEvent(

parent_id=pending.parent,

result=result,

arg=event.arg

))Ouch. That’s… quite a rewrite. We just turned a trivial four-lines function into a 180 lines, impossible-to-debug, monster.

But this, as a mechanism, works. At each step of the event loop, the computation

is trivial and non-blocking. Alright, I’m cheating a bit: the call to

print remains blocking, but you could also rewrite it into a sequence

of non-blocking calls. In fact, if you look at Firefox or nginx, for

instance, you’ll find plenty of code written like this, usually

placing requests for long external operations (for instance, writing to

the database or to the network), then waking up once the request has

progressed to the next step, enqueuing the next step of the work as

a new event, etc.

Of course, the code I’ve written above is not nearly thread-safe. It could be made thread-safe, and hopefully take advantage of parallelism, at some cost in terms of throughput.

But before we start thinking about parallelism, let’s see how we can improve that monster.

Continuation-passing style (CPS)

Continuation-passing style is a programming style in which functions never return a result. Instead, each function receives as (usually last) argument a closure (the “continuation”) with instructions on what to do with the result.

If you have ever programmed with old-style Node, that’s exactly how it used to work. If you have ever programmed with monads, this involves the same idea.

So, let’s rewrite our code to use CPS:

def fibonacci_cps(n: int, then: Callable[[int]]):

"""

Compute `fibonacci(n)`, then call `then`.

"""

if n <= 1:

return then(1)

# Once we have the result of `fibonacci(n - 1)`, compute

# `fibonacci(n - 2)`, then sum both.

def with_left(left: int):

fibonacci_cps(

n=n - 2,

then=lambda right: then(left + right)

)

# Compute `fibonacci(n- 1)`.

fibonacci_cps(

n=n - 1,

then=with_left)

def handle_event(event: BaseEvent):

if isinstance(event, ComputeFibonacciEvent):

fibonacci_cps(event.arg, lambda result: print(f"fibonacci({event.arg})={result}"))

elif isinstance(event, SleepEvent):

event.thunk()That’s nicer. So far, it’s blocking, but it’s nicer.

Now, by moving to CPS, we have removed the need to return, which means that

we can delay computation. For instance, without breaking the rest of the code,

we can add wait instructions in the middle of fibonacci_cps.

First, let’s add some general-purpose support code:

@dataclass

class SleepEvent(BaseEvent):

"""

Wait until the next tick of the event loop before running `thunk`.

"""

thunk: Callable[[]]

def wait[T](continuation: Callable[[T]]) -> Callable[[T]]:

"""

Wait until the next tick of the event loop before running `continuation`.

"""

def result(arg: T):

def thunk() -> None:

return continuation(arg)

event_queue.put(SleepEvent(

thunk=thunk

))

return result

def handle_event(event: BaseEvent):

if isinstance(event, SleepEvent):

event.thunk()

else

# ... As previouslyOnce we have this, we may rewrite fibonacci_cps as follows:

def fibonacci_cps(n: int, then: Callable[[int]]):

"""

Compute `fibonacci(n)`, then call `then`.

"""

if n <= 1:

return wait(then)(1)

# Once we have the result of `fibonacci(n - 1)`, compute

# `fibonacci(n - 2)`, then sum both.

def with_left(left: int):

fibonacci_cps(

n=n - 2,

then=lambda right: wait(then)(left + right)

)

# Compute `fibonacci(n- 1)`.

def compute_left():

fibonacci_cps(

n=n - 1,

then=with_left)

wait(compute_left)…and with this, we have made our code non-blocking. And of course, wait and SleepEvent

can be reused for any other CPS function. We could go further and customize just how many

ticks we wait, or dispatch tasks to various CPUs if we wanted.

If you recall our earlier definitions, writing our code as CPS makes it asynchronous. And we just demonstrated how to refactor our asynchronous code to also be non-blocking.

CPS calls are the real reason for which Node.js was initially lauded as “fast”. It wasn’t about CPU speed, but about the fact that, thanks to CPS, you can run (literally) millions of concurrent tasks, especially tasks that spend most of their time waiting for network or database read/writes, without taxing the CPU too much.

So far, so good. Of course, we have still increased the code size for fibonacci from 4

lines to 18 and made it harder to read. Also, if you’re interested in performance, we

have allocated a lot of closures, which we’re going to pay in garbage-collection time.

Can we do better?

Generators / iterators

Generators are function-like objects that can be called repeatedly to return a succession

of values. From the point of view of languages that support CPS natively (more precisely,

languages that support call/cc or delimcc), generators are simple abstractions upon

simple use cases for continuations.

As it turns out, generators have been used in several languages and frameworks to achieve concurrency.

Let’s start with some support code:

@dataclass

class ContinueEvent(BaseEvent):

"""

Schedule an execution for the next tick of the event loop.

"""

generator: Generator[None, None, None]

def handle_event(event: BaseEvent):

if isinstance(event, ComputeFibonacciEvent):

# Start computation.

def generator() -> Generator[None, None, None]:

fibo = fibonacci(event.n)

try:

while True:

next(fibo)

# Not ready yet.

yield None

except StopIteration as e:

result: int = e.value

print(f"fibonacci{event.n}={result}")

event_queue.put(ContinueEvent(generator()))

elif isinstance(event, ContinueEvent):

# Continue computation

try:

# Are we done yet?

next(event.generator)

# Continue next tick.

event_queue.put(event)

except StopIteration:

# Done

pass

else:

raise NotImplementedErrorThe general idea is that handle_event receives instances of ContinueEvent and keeps

calling next(event.generator). If next(event.generator) returns (without raising), it

means that the code requested a pause. For our implementation of Fibonacci’s function,

this will happen because we decided to break the function call into several non-blocking

segments, but for anything involving, say, network calls, it will mean that the network

call isn’t finished yet.

In practice, here’s our new version of fibonacci:

def fibonacci(n: int) -> Generator[None, None, int]:

"""

An implementation of fibonacci.

Yields None to reschedule the computation to the next tick of the event loop.

After that, returns `int` with the result of `fibonacci(n)`.

"""

if n <= 1:

return 1

yield None # Take a break.

waiting_left = fibonacci(n - 1)

try:

while True:

next(waiting_left)

# Not `StopIteration` raised, which means we need to take a break.

yield None

except StopIteration as e:

left: int = e.value

waiting_right = fibonacci(n - 2)

try:

while True:

next(waiting_right)

# Not `StopIteration` raised, which means we need to take a break.

yield None

except StopIteration as e:

right:int = e.value

return left + rightAlright, it is still pretty long, but it is quite readable, in a Go style of things, with lots of easy-to-skip copy & paste code. In fact, if we had some syntactic support, we could imagine rewriting this entire function as:

def fibonacci(n: int) -> Generator[None, None, int]:

"""

An implementation of fibonacci.

Yields None to reschedule the computation to the next tick of the event loop.

After that, returns `int` with the result of `fibonacci(n)`.

"""

if n <= 1:

return 1

yield None # Take a break.

left = await fibonacci(n - 1) # Pseudo-syntax.

right = await fibonacci(n - 2) # Pseudo-syntax.

return left + right…but let’s not get too much ahead of ourselves.

What are the benefits?

- The transformation from our original

fibonacciis trivial and, in fact, mostly automatizable. - In many cases, this transformation can be optimized by a compiler, to have small to no overhead, by opposition to CPS.

- It is easy to add or remove

yield None, which in turn means that you can control context-switches, good both for performance and to avoid multi-threading pitfalls. Sadly, there is a non-trivial cognitive cost in most languages. From the languages I know, only Rust manages to offload (most of) the cognitive cost of paying attention to race conditions onto the compiler. - Writing an implementation of

ContinueEventthat dispatches tasks to multiple CPUs is quite simple.

What are the downsides?

- We’re still allocating memory dynamically, which isn’t great wrt performance.

- There are still no guarantees about being non-blocking. In particular, if we

forget to call

yield None, our entire transformation will be pointless.

Note that this rewrite as generators is, once again, a concurrent rewrite, which makes no guarantee about non-blocking.

Can we do better?

A small JavaScript aside

In JavaScript/TypeScript, these days, instead of CPS or generators to achieve concurrency,

developers tend to use Promise. While JavaScript supports generators, the need to make

JavaScript code non-blocking (and in particular, historically, the JavaScript code that

powers the user interface of Firefox) predates the implementation of generators in the

language.

Without syntactic support, the implementation of Fibonacci would look more like

/**

* Sleep a few milliseconds. Non-blocking.

*/

function sleep(ms: number): Promise<void> {

return new Promise((then) => setTimeout(then, ms));

}

function fibonacci(n: number): Promise<number> {

if (n <= 1) {

return Promise.resolve(1);

}

return sleep(0).then(() => {

return fibonacci(n - 1).then((left) => {

return fibonacci(n - 2).then((right) => {

return left + right

})

})

});

}which is essentially a higher-level API on top of CPS.

In practice, JavaScript has a double event loop, with Promise.then() being resolved

in the inner event loop (micro-ticks) and events (including setTimeout) being resolved

in the outer event loop (ticks). This simplifies considerably some user-facing APIs,

but we don’t need to enter the details here.

This formulation is a bit easier to read than CPS, plus provides a better path for error-handling (not displayed here), but still a bit hard on the eyes and also requires plenty of allocations. Also, in terms of performance, this would not be very friendly to multi-threading. Since JavaScript does not support what we usually call multi-threading 7, that’s ok for JavaScript, but explains why the same solution isn’t pushed forward in other languages.

So, the question remains: can we do better?

async/await

Well, yes, we can. In fact, in Python or Rust, I don’t think I’ve ever seen application code written by human beings in the above style (I have seen much in JavaScript applications and some as part of Python or Rust libraries and frameworks, though). What we do, instead, is introduce some syntactic sugar that lets us write

async def fibonacci(n: int) -> int:

"""

An implementation of fibonacci.

Yields back time to the scheduler at each non-trivial recursive call.

"""

if n <= 1:

return 1

await asyncio.sleep(0) # Take a break.

left = await fibonacci(n - 1)

right = await fibonacci(n - 2)

return left + rightThis, to a very close approximation, is syntactic sugar for the code we wrote above.

You can run it with asyncio.run, the de facto standard executor for async/await.

async/await in Rust

The code is quite similar in Rust, and will compile essentially to the same

loop with yield as in Python:

async fn fibonacci(n: u32) -> u64 {

if n <= 1 {

return 1

}

tokio::time::sleep(Duration::new(0, 0)).await; // Take a break.

let left = Box::pin(fibonacci(n - 1)).await; // We can't store recursive calls on the fixed-size pseudo-stack, so we need to allocate memory.

let right = Box::pin(fibonacci(n - 2)).await; // Since we'll rewrite it, we also want it to remain in place (hence the `pin`).

left + right

}Interestingly, as of this writing, yield is not part of Rust’s surface level language.

However, it is used internally for this purpose. The generator will, in turn, compile

to a much more complicated finite state machine which we won’t detail here (if you want to look at it, see this playground and click “MIR” instead of “Build”). This Rust code

is, of course, thread-safe, and will in fact be dispatched to available CPUs if you execute it with

tokio, the de facto standard executor for async/await on non-embedded platforms.

Note that while both Rust and Python compile to similar-looking generators,

there are quite a few differences in the underlying machinery, besides threads and

in-memory representation. In particular, where the Python executor needs to poll

by calling the generator (Awaitable) repeatedly until it eventually advances, the Rust executor

expects the be informed by the generator (Future) once it is ready to be polled once again.

Rust also supports canceling futures.

async/await in JavaScript

The surface-level JavaScript/TypeScript code is, again, quite similar, and will compile to

the Promise-based code above:

/**

* Sleep a few milliseconds. Non-blocking.

*/

function sleep(ms: number): Promise<void> {

return new Promise((then) => setTimeout(then, ms));

}

async function fibonacci(n: number): Promise<number> {

if (n <= 1) {

return 1;

}

await sleep(0);

let left = await fibonacci(n - 1);

let right = await fibonacci(n - 2);

return left + right;

}This will execute with your browser or Node’s built-in executor.

Note that, despite similar surface-level syntax, the underlying behavior is quite different from either Rust or Python: Promises do not rely on the executor to be polled, put to sleep or awakened. Rather, Promises essentially schedule themselves.

Executors?

If you wonder what an executor is, well, it’s an event loop we

have been discussing throughout this piece, along with the basic events

required to handle async/await and generally whatever you need to

build your own event loop on top of it if you need one.

The case for and against

Now, is there any drawback to async/await? Yes, there are a few, and they

differ across implementations.

The first drawback, in some languages, is stacks. If you’ve ever attempted to debug async code in Python or read through stack traces, you may have suffered. This used to be the case in JavaScript, too, although there have been improvements. This problem is not universal, as Rust, C# or F#, for instance, have proper async backtraces.

A second drawback is that async/await has a performance cost. CPU-bound code

written with async/await will simply never be as fast or as memory-efficient

as the equivalent synchronous code. The cost is higher in Python (which requires

polling and numerous allocations) or JavaScript (which supports wakeups but

requires even more allocations) than in Rust (which supports wakeups and can

often avoid allocations), but it exists regardless. Of course, if you’re writing

I/O-bound code, the cost of I/O is typically several orders of magnitude larger

than any async/await overhead, so you will probably not observe any difference.

But the biggest problem I’ve seen, by far, is that

most developers don’t seem to understand async/await.

So let me insist: async/await is a mechanism to easily make your code

asynchronous. It will make your code concurrent. It’s also a tool that

you can use to make your code non-blocking, but by itself,

it doesn’t make your code magically non-blocking. In particular, if you

call blocking code from async code, you will block.

Also, since every call to await might hide a context-switch to another

scheduled concurrent task, you will encounter most of the same problems as

with multi-threaded code: you will encounter data races, you will need locks

and possibly task-local storage and, to make things worse, your usual locks

won’t work – for instance, Rust’s tokio offers its own async implementation

of Mutex, RwLock, etc. In fact, these implementations are typically

slower than their thread counterparts.

And finally, async/await is a form of function coloring. You can’t wait

for an async function to wait without await and you can’t use await in

anything other than an async function. This means that you can’t pass an

async function as callback to a function that expects a sync function,

including your map, filter, fold or list comprehensions. This limits

code reuse and means that, once in a while, it will entirely prevent you

from using an API – I recently encountered the problem in Python, with a

scikit optimization function that required a sync callback, but the function

could only implemented as async, since it relied on further async functions.

It’s seldom a problem, but when it is, you are without a solution.

As I’ve briefly alluded to, async/await is also available in a bunch of other languages, including

F#, C#8, Haskell, Swift, Kotlin and (to some extent) C++. All languages that I’ve checked out, with the exception of JavaScript, compile async/await in the same manner as Python or Rust.

Async/await and non-blocking

Are async/await sufficient to make the code non-blocking? Well, I’ve already answered

that question above, but let me illustrate and explain.

If you look again at the desugared Python code, full of while True and yield None, you

will notice that await doesn’t yield control to the executor. In fact, you can run

code full of await and never context-switch to another task:

import asyncio

async def noop():

# Do nothing.

return

async def foo():

for i in range(100):

await noop() # This await will not make your foo() and bar() interleave.

print("foo")

async def bar():

for i in range(100):

await noop() # This await will not make your foo() and bar() interleave.

print("bar")

class Main:

def __init__(self):

self.task_1 = None

self.task_2 = None

async def run(self):

# Note: We need to prevent tasks from being garbage-collected.

self.task_1 = asyncio.create_task(foo())

self.task_2 = asyncio.create_task(bar())

main = Main()

asyncio.run(main.run())

# Prints 100x foo() then 100x bar()In other words, async/await, by themselves, will not make your execution

non-blocking.

Does Rust async/await make code magically non-blocking?

async fn noop() {

// Do nothing

}

async fn foo() {

for _ in 0..100 {

noop().await;

println!("foo")

}

}

async fn bar() {

for _ in 0..100 {

noop().await;

println!("bar")

}

}

#[tokio::main]

async fn main() {

let foo = tokio::task::spawn(foo());

let bar = tokio::task::spawn(bar());

let _ = foo.await;

let _ = bar.await;

}

// Prints `foo` and `bar` randomly interleaved.

Victory? Well, not quite. Tokio has detected that the computer has several cores

and uses multi-threading. But what happens if we remove support for multi-threading?

Let’s rewrite main:

fn main() {

// Force the configuration to use a single thread.

let runtime = tokio::runtime::Builder::new_current_thread()

.build()

.unwrap();

runtime.block_on(async {

let foo = tokio::task::spawn(foo());

let bar = tokio::task::spawn(bar());

let _ = foo.await;

let _ = bar.await;

})

}

// Prints 100x `foo` then 100x `bar`.

Yup, if we remove support for multi-threading, execution is sequential once

again. So no, in Rust either, async/await won’t make your code magically

non-blocking, although tokio::task::spawn might.

What about JavaScript?

async function noop() {

// Do nothing

}

async function foo() {

for(let i = 0; i < 100; ++i) {

await noop();

console.debug("foo");

}

}

async function bar() {

for(let i = 0; i < 100; ++i) {

await noop();

console.debug("bar");

}

}

function main() {

Promise.race([foo(), bar()])

}

// Prints `foo` `bar` `foo` `bar` `foo` `bar` ... 100x each.

Wait, what? In JavaScript, async/await makes code execute as concurrently

as one can hope?

Unfortunately, no.

If you recall, I mentioned that JavaScript has a double event loop, with micro-ticks

and ticks. foo and bar return instances of Promise and each call to await is

desugared to a Promise.then(). Some of the early prototypes of Promise had Promise.then

execute the code immediately, but developers were surprised, because they expected

await to, well, sleep. Other of the early prototypes called setTimeout, but this

meant that Promise and async/await could not be used naturally alongside some APIs such

as IndexedDB or Fetch, which committed their operations at the end of the current event, and

this proved also quite surprising for developers. So in the end, the standardized version of

Promise introduce the micro-ticks and Promise.then() automatically enqueues the closure

to be executed at the next micro-tick, but still in the same event. This meant that (unless

developers call setTimeout or wait for I/O), Promises cannot be used to automatically

chunkify work, but also made Promise.then() much faster to execute (and presumably

easier on the garbage-collector, I didn’t benchmark that).

So, in JavaScript async/await will encourage you to think about code as if it

were non-blocking, but it’s also not sufficient to make your code non-blocking.

Async and non-blocking I/O

As mentioned earlier, I/O can be very slow. HTTP calls or database calls can take unbounded amounts of time, and even disk I/O can slow things down considerably, especially on network shares.

So far, we have cheated by focusing on a purely mathematical function. But in real applications, you will need to deal with I/O and other blocking calls. After all, as mentioned previously, if you call blocking code from asynchronous code, well, you’re still blocking.

Luckily for you, your favorite async framework will usually provide non-blocking and ready-to-use async operations for these tasks. Let’s take a look at how these operations are made non-blocking.

There are typically two cases. In the first case, the operating system or

lower-layer libraries may already provide non-blocking calls for such operations,

e.g. epoll, io_uring, kqueue or I/O Completion Ports. Generally speaking,

these primitives will let applications or libraries:

- (un)register to be informed when an operation is possible;

- (un)register to be informed when an operation is complete;

- schedule an operation.

While the specifics are different across these primitives, the

general idea is not dissimilar to what we have shown, for instance,

earlier, when dividing fibonacci in chunks. In fact, the

implementation of async/await in Rust is optimized for such a

wakeup mechanism.

In practice, things are a bit more complicated. In fact, I don’t know of any async/await embedding

on top of io_uring in any language yet, because it doesn’t quite match this model. But

generally, that’s the idea.

In the second case, there is no non-blocking call for such operations. So we have to resort to threads. In such cases, the framework will:

- request a thread from a thread pool;

- dispatch the blocking call to the thread;

- once the blocking call is complete, wake the executor with the result.

In Python, this may look like:

class NonBlockingIOManager:

def __init__(self):

self.pool = ThreadPoolExecutor()

async def file_read(self, path: str) -> str:

"""

Non-blocking file read.

"""

def task():

with open(path) as file:

return file.read()

return await asyncio.get_event_loop().run_in_executor(

self.pool,

task)This is not ideal, but in the absence of a better solution, it works.

And in fact, in JavaScript, Promise was designed to help integrate results

coming from multiple threads/processes in such a manner (but without dealing

with threads itself).

What about Go?

Go is a bit of an outlier, and one of the few programming languages that do not

provide any kind of support for async/await, or any of the methods explored

so far. Nevertheless, this language is considered top-of-the-class (by some criteria)

for concurrent programming and web servers.

Where Python, C#, Rust, JavaScript or (so far) Java have decided to make user-level

concurrency explicit by relying on async/await or lower-level constructions,

Go’s designers have decided to make it implicit by provided a transparent M:N

scheduler.

func fibonacci(n uint32) uint64 {

if n <= 1 {

return 1

}

// (probably) no need to sleep manually

return fibonacci(n-1) + fibonacci(n-2)

}

type FibonacciEvent struct {

Arg uint32

}

func main() {

for {

e <- nextEvent;

switch event := e.(type) {

case FibonacciEvent:

go fibonacci(event.Arg)

}

}

}How does it work?

- The Go environment contains a pool of threads and pre-emptive scheduler.

- Launching a goroutine with

goallocates dynamically a new stack for this goroutine. - A goroutine runs on a thread from the thread pool.

- The Go scheduler may stop a goroutine at any moment, saving its program counter, task-local storage, etc. and replacing them with those of another goroutine.

- During compilation of C calls, the CGo compiler instruments the (non-blessed) C code with a little assembly that calls into the runtime environment.

- At runtime, when calling a C function, Go’s executor expects that the call is blocking, monitors how long the thread is blocked by the C function, and past some delay, removes the thread from the thread pool and replaces it with a new thread. Once execution of the C function has completed, the thread is not returned to the thread pool.

The immediate benefit comes in terms of resource usage/cognitive load. You can launch as many

tasks (“goroutines”) as you want, without having to deal with async/await or care about

memory usage, and these tasks will be executed concurrently, hopefully in parallel. Moreover,

Go functions are not colored by synchronicity. This means that interfaces or callbacks don’t

need to care whether they accept sync or async implementations, as there is no difference.

The immediate drawback is that, since concurrency is, to a large extent, implicit, it makes the code harder to reason about. I don’t think that this is a real problem, but I’ve seen it lead to developers writing concurrency-unsafe code and running it concurrently without paying attention. I think it’s more a problem of education than language.

One could argue that implicit concurrency makes it harder to optimize code, but since very few developers actually care about such level of code optimization, and since Go is one of the fastest languages around, I consider this a minor impediment. Of course, if you need more performance, use Rust.

There are real issues with goroutines, such as the implicit capture by reference or the ease of accidentally copying a lock instead of sharing it, but these are not due to the concurrency model or implementation.

Other languages with M:N scheduling

Now, I mention that Go is an outlier, but it’s by no mean the only language with M:N scheduling. Erlang has been the poster child for M:N scheduling since the 90s, Haskell has supported it since the early 2000s, Rust used to support it but removed the feature before 1.0, and Java is moving towards supporting it. I believe that OCaml supports it, too, but I’m still investigating that.

Rust removing the feature is an interesting case for why M:N scheduling is not always the solution.

This happened for a variety of reasons. It took some time for Rust to decide what kind of language it was. Initial versions of Rust offered garbage-collection, a M:N scheduler, built-in support for real-time programming. Each of these features was convenient, but made Rust harder to maintain or port to new architectures.

In particular, as it became clear that Rust was a really good language to write very low-level code, including firmware, memory allocators, embedded code and OS code, these features became blockers, as they relied on having an operating system and an allocator in the first place. To make Rust a true system language, these features had to leave (and be turned into libraries).

In addition, the Rust ethos prefers explicit costs to implicit ones. Many languages claim that (including Go and Python), but few languages actually follow up on this design principle (the ones I can think of are Rust, Zig, and of course C and C++). Having a M:N scheduler requires allocating and growing stacks implicitly, which goes against this ethos: allocating memory has a cost, imposes a choice of allocator on the user (which would make Rust much less usable for e.g. browser engines or game engines), and may fail.

Not only that, but Rust is designed to both call into C and be callable from C transparently and at zero implicit cost and zero implicit risk. However, to be able to call into C without blocking, you need a machinery comparable to what CGo provides. This machinery requires, once again, implicit allocations, hence hidden costs and hidden points of failure.

Also, M:N scheduling simply made no sense on some architectures. For instance, making M:N work doesn’t make sense on architectures that do not support dynamic memory allocation or (embedded) operating systems that do not support native threads.

And finally, M:N scheduling is complicated and was holding the language back. So the decision was made

to move M:N scheduling into a crate called libgreen. Then, as work on Future, then async/await

advanced, the Rust community decided that async/await served much better the Rust ethos of explicit

costs than M:N scheduling, and decision was taken to drop the feature.

None of this means that M:N scheduling is bad, incidentally. But it does illustrate that it is not always a desired feature.

What about OCaml?

OCaml is a very interesting case. It has supported the equivalent of Go’s channels since the late 1990s and, with version 5.0, it finally gained support for multicore. Recent benchmarks suggest that it can be actually much faster than Go on some tasks (I didn’t take the time to check these benchmarks, so don’t trust me on this).

In OCaml, using the Eio executor, our Fibonacci would look like:

let rec fibonacci (n:int) =

if n <= 1 then (

1

) else (

Fiber.yield(); (* take a break *)

fibonacci (n - 1) + fibonacci (n - 2)

)this implementation sits somewhere Go’s and Python/Rust/JavaScript. As Go, it doesn’t need async

or await. As Python/Rust/JavaScript/C++, it expects an explicit operation (here Fiber.yield())

to allow cooperative context-switching.

But in fact, OCaml is its own beast. For one thing, as far as I understand, the OCaml compiler

doesn’t go through any compilation step specific to multi-tasking, either to compile async/await

into yield or Promise or anything similar, or to introduce implicit cooperative context-switching.

In fact, the above code can run, without change or recompilation, either as a backgrounded, non-blocking

task, or as a blocking task, depending on the context.

How does this work?

Well, the implementation of Fiber.yield() actually raises an effect. Effects are almost identical

to exceptions, with one key difference: where exception handlers can either handle the exception or

let it propagate, effect handlers can also decide to resume the work at the site the effect was

raised. In a way, effects are a generalization of both exceptions (they add the ability to resume)

and generators (they add the ability to cross an arbitrary stack depth).

This is an extremely powerful mechanism that can be used to provide context-customizable retries, logging, mockable I/O, etc. and of course concurrency. For instance, we could write:

try some_function() with

| effect Yield continuation -> (

(* Proceed in the next tick *)

enqueue_event (Continue continuation)

)or, if we don’t want concurrency

try some_function() with

| effect Yield continuation -> (

(* Proceed immediately *)

continuation ()

)… and we could even have both on the same stack to force a function to run without concurrency locally (e.g. for performance reasons) despite running in a concurrent or even parallel executor.

It’s a very interesting mechanism that I’m still test-driving. I’m not sure about the performance

implications of the continuation. As far as I understand, context-switching between two fibers

is (or can be) as fast as in Go, but stack management is more complicated, as the code executed

in the try needs its stack, the code executed in the effect expression needs its stack, both

of these stacks stack on top of the code that calls the entire try ... with ... expression, and

continuation() require its own stack.

There’s also the issue that, much like exceptions, effects don’t show up in the type

of a function, which can lead to accidentally uncaught effects. Maybe an updated ocamlexn could

solve that?

Note: As pointed out by /u/phischu on Reddit, there are of course several more experimental languages that expand this feature, including Lexa and Effekt.

Which tool should I use?

Threads

- Threads are good, but tricky to use. By all means, please learn how to use them correctly and safely.

- On most platforms, threads are needed at some level to handle CPU-heavy tasks.

- On most platforms, threads are needed at some level to make some I/O non-blocking.

- If you are in a position where millisecond matters, you will need to profile your thread switches.

- Don’t forget to check whether you are constrained by a GIL.

Processes

- Processes are good, but heavy.

- You may need to use them for security/sandboxing/crash isolation.

- They can be useful for performance, typically in GIL-constrained languages, but the cost of communications can hurt a lot, so you’ll have to benchmark carefully.

Chunkification

- Chunkification makes your code really hard to read.

- Chunkification always makes your code slower.

- You can often avoid doing it manually, e.g. by using

async/await6.

Async/await

async/awaitis useless if you perform blocking calls, whether they’re blocking on CPU or on I/O.- if you own the CPU-bound blocking code, you can use

async/awaitto chunkify it cleanly into non-blocking code. - otherwise, the only generic way to turn blocking calls into non-blocking calls is to use threads (or sometimes, processes).

- once you have non-blocking I/O,

async/awaitshines at using your CPU efficiently in tasks that are constrained by I/O. async/awaitis function coloring, which may prevent you from using it.

M:N scheduler and variants

- I can’t think of any good reason to not take advantage of a M:N scheduler if it’s available.

- The risks are almost identical to multi-threading, so please take time to learn how to write thread-safe code.

Conclusions

I hope that these few pages of code have helped clarify why async/await was designed, what

it is good for and what it won’t do for you, as well as a few alternatives.

If I find the opportunity, I’ll try and write a followup with benchmarks.

-

Other than Unix command-line tools. ↩︎

-

Sure, it will generally open in <16ms. But once in a while, it won’t, especially if it’s a network mount (it can take several seconds), or if your file system is already quite busy. ↩︎

-

As I write this, downloading the

index.htmlfrom my blog (whithout any other resource) takes about 30ms. That’s roughly the duration of two frames. ↩︎ -

Among other things, Go stops being type-safe in presence of data race conditions. ↩︎

-

And just as I write that, I see the ChangeLog for Python 3.14, which suggests that free threading is moving on to Phase II. Who knows, maybe it will be faster? ↩︎

-

/u/asb kindly pointed out on Reddit that there are efforts to decrease the cost of scheduling: https://lkml.org/lkml/2020/7/22/1202 . ↩︎ ↩︎

-

It supports a multi-process paradigm, which may be implemented with threads, but that’s a bit different. ↩︎

-

In fact, F# was the first mainstream language to feature

async/await, I believe, followed by C#. ↩︎

Copyright: Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)

Author: David Teller

Posted on: July 8, 2025